In 2020, Nick Murray and I were commissioned by The Quietus and Aerial Festival to compose new music based on the poet Wordsworth. The result was our three-track EP, Progressions:

Hope vs Fear

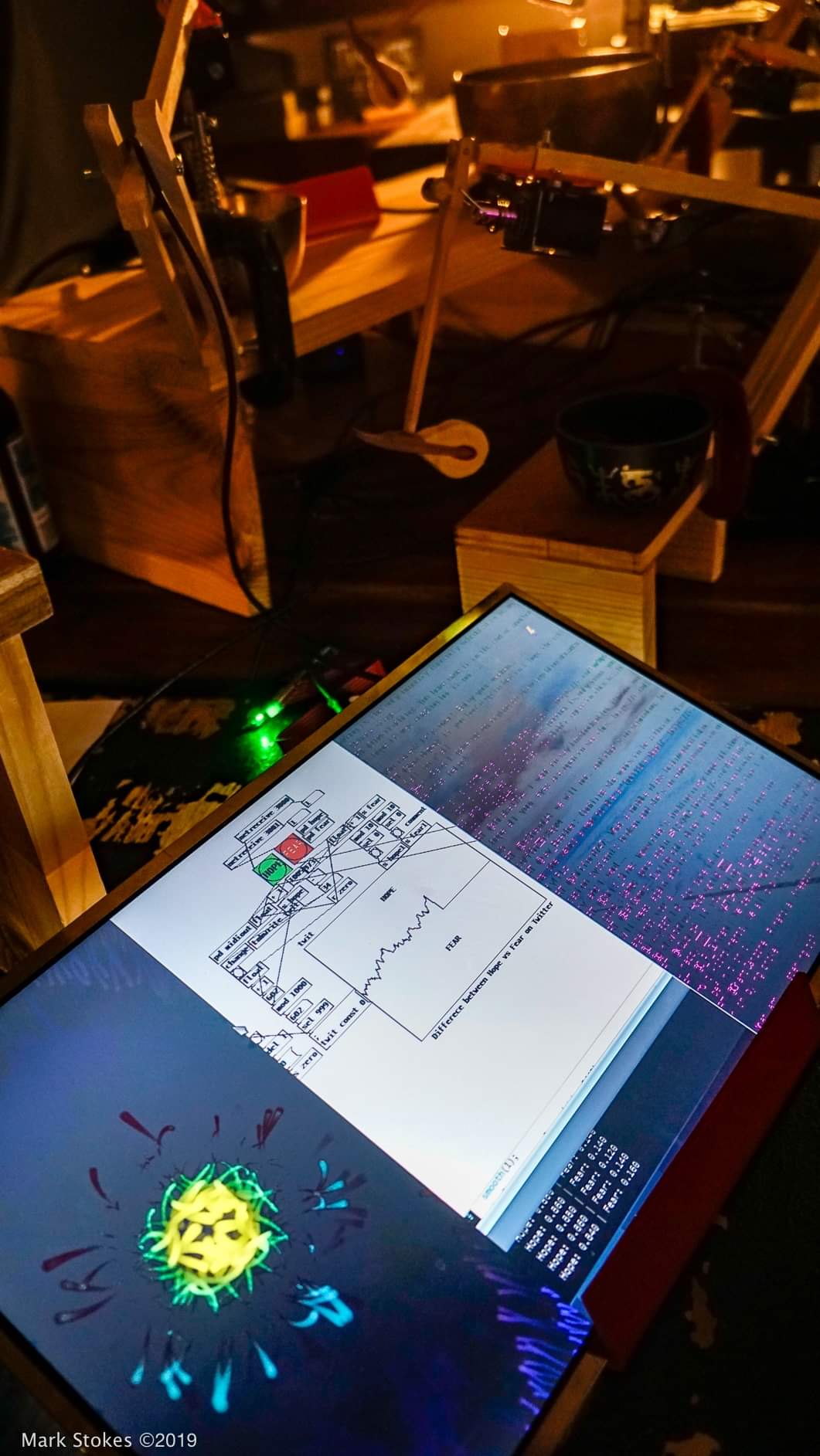

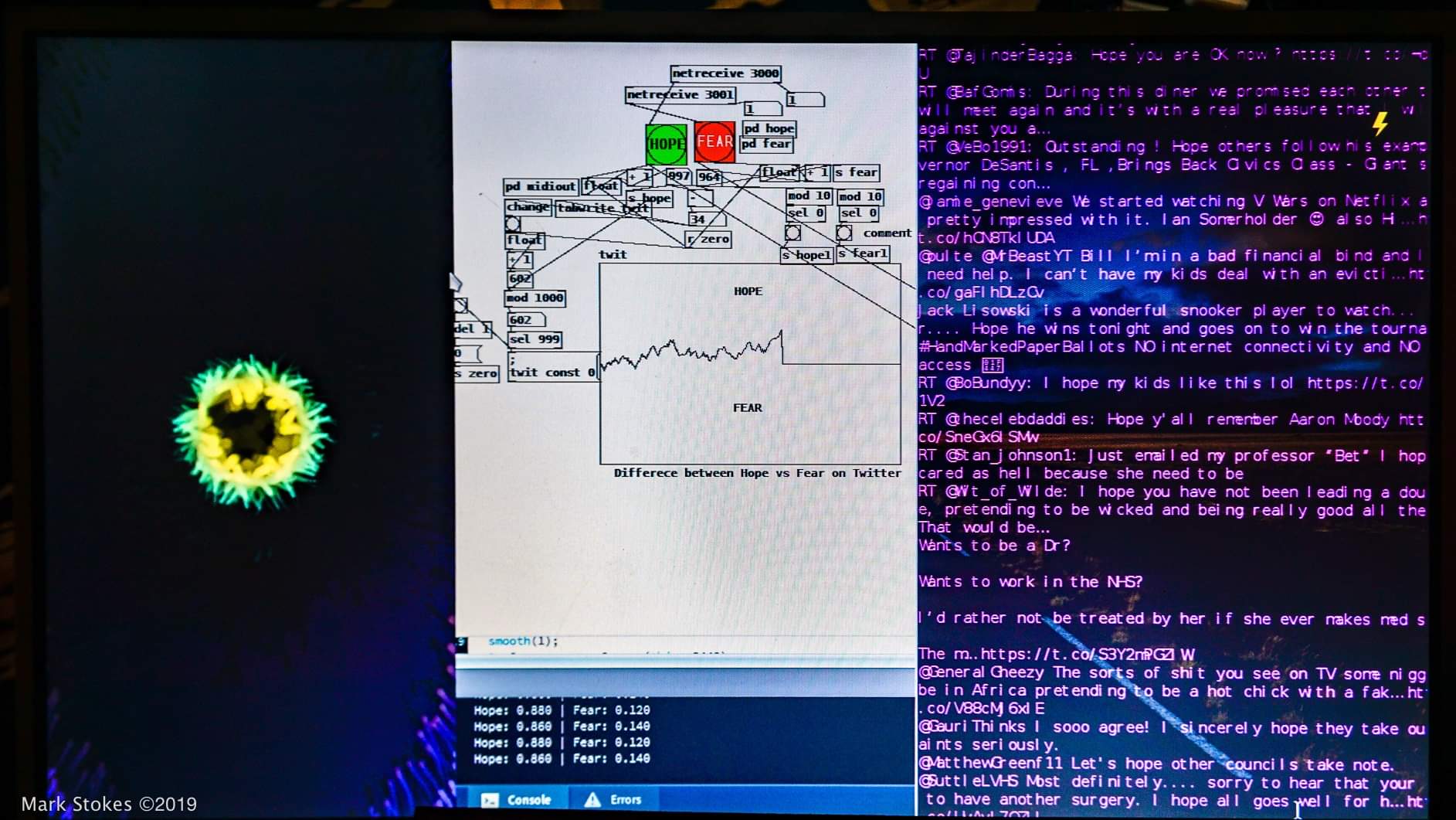

My newest iteration of Twitter-induced music is based on tracking Hope and Fear on a global scale.

I have a Raspberry Pi running a custom Python script that searches all of Twitter, live, for any mention of the words Hope or Fear. Whenever either word is mentioned, the script sends a message to PureData and prints the tweet in the terminal.

PureData then tracks the mentions and graphs the difference between Hope and Fear. Every 50 mentions it also checks the balance: if it is hopeful, it plays an ascending tone on five singing bowls, and if it is fearful, it plays a descending one. This is achieved by sending MIDI signals from PureData to a DADA Machines Automat.
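A minimal sketch of the counting logic described above, written in Python rather than as a PureData patch, so it is an illustration and not the actual script or patch. It assumes tweets arrive as plain strings (the Twitter connection itself is left out), that only the whole words "hope" and "fear" count, that PureData receives FUDI messages (semicolon-terminated text), and that "hopeful" means more Hope than Fear within each batch of 50. The message names `hope`/`fear` and all function and class names are hypothetical.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Whole-word, case-insensitive match, so "hopefully" or "fearless" are ignored.
PATTERN = re.compile(r"\b(hope|fear)\b", re.IGNORECASE)

BATCH_SIZE = 50  # "every 50 mentions"


def find_mentions(tweet: str) -> list[str]:
    """Return each 'hope'/'fear' mention in a tweet, lower-cased, in order."""
    return [m.lower() for m in PATTERN.findall(tweet)]


def fudi(word: str) -> bytes:
    """Format one mention as a FUDI message for Pd (hypothetical message names)."""
    return f"{word} 1;\n".encode("ascii")


@dataclass
class HopeFearTracker:
    hope: int = 0
    fear: int = 0
    batch: list[str] = field(default_factory=list)

    @property
    def difference(self) -> int:
        # Positive means more Hope than Fear overall: the value being graphed.
        return self.hope - self.fear

    def add(self, word: str) -> Optional[str]:
        """Record one mention; every 50th call returns 'ascending' or 'descending'."""
        if word == "hope":
            self.hope += 1
        else:
            self.fear += 1
        self.batch.append(word)
        if len(self.batch) < BATCH_SIZE:
            return None
        # Assumption: a tied batch counts as fearful.
        hopeful = self.batch.count("hope") > self.batch.count("fear")
        self.batch.clear()
        return "ascending" if hopeful else "descending"
```

To reach PureData, one option is a TCP connection to a `[netreceive 3000]` object in the patch, e.g. `socket.create_connection(("localhost", 3000)).sendall(fudi("hope"))`; the port number is arbitrary, and routing the `hope`/`fear` messages would happen inside the patch.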

I also have some live visuals generated in Processing, created by the fantastic Jake Dubber. These visuals are also controlled by the Hope vs Fear data, with Hope blooming from the centre and Fear spreading inwards from the outer edges.
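One way the Hope vs Fear data could drive visuals like these, sketched in Python rather than Processing and not Jake Dubber's actual code: treat Hope as a disc growing from the centre and Fear as a ring closing in from the edge, and place the boundary between them so that each area is proportional to that word's share of mentions. The function name and the area-proportional rule are assumptions.

```python
import math


def hope_fear_boundary(hope: int, fear: int, max_radius: float) -> float:
    """Radius where the Hope disc (inside) meets the Fear ring (outside).

    Areas track each word's share of mentions:
    pi * r**2 / (pi * R**2) == hope share, so r = R * sqrt(hope share).
    """
    total = hope + fear
    if total == 0:
        # No data yet: split the canvas area evenly between Hope and Fear.
        return max_radius / math.sqrt(2)
    return max_radius * math.sqrt(hope / total)
```

Using the square root keeps the visual honest: a linear radius would make Hope look much larger than its share once it passes the halfway point.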

This was first shown at Tate Modern’s Tate Late event in November 2019, with the Hackoustic community.

A variation of the installation creates its own sound file and envelope as time progresses; you can listen to it in this video:

Mini:Mu – Micro:Bit – PureData videos

Tutorial Videos

I’m giving video tutorials a whirl, have a gander:

Proximity Om

This installation was made in collaboration with phenomenal artist Om Unit.

It uses a bit of physics known as Faraday’s Law of Electromagnetic Induction to transfer audio signals between two separate circuits, depending on how close they are to each other.
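A simplified way to write this down, assuming an idealised pair of coupled circuits described by a mutual inductance $M$ (a symbol introduced here, not from the original): the audio current $I_1(t)$ in an installation wire induces a voltage in the pickup coil of

$$\mathcal{E}_2(t) = -\frac{d\Phi_{21}}{dt} = -M\,\frac{dI_1}{dt}.$$

For a single tone $I_1(t) = I_0 \sin(2\pi f t)$ this becomes

$$\mathcal{E}_2(t) = -2\pi f\,M I_0 \cos(2\pi f t).$$

$M$ shrinks as the pickup moves away from a wire, which is why proximity sets the level. The factor of $f$ also means a bare inductive link favours higher frequencies (roughly 6 dB per octave), which a pickup circuit may need to account for.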

The track ‘The Exodus’ was split into three parts, and each part’s audio signal is sent through its own wire in the installation. The ‘pickup’ contains a custom circuit that listens to those audio waves and makes them audible. Where the wires cross, so do the audio signals, allowing you to pick and mix parts of the track depending on where you hold the pickup in physical space.
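A rough model of the pick-and-mix behaviour, assuming each wire acts like a long straight conductor lying flat on the installation, so its field (and therefore that part's level in the pickup) falls off roughly as 1/r. The wire coordinates, the pickup height and the function names are hypothetical, and the real pickup circuit's gain and frequency response are not modelled.

```python
import math

Point = tuple[float, float]


def distance_to_line(p: Point, a: Point, b: Point) -> float:
    """Perpendicular distance from p to the infinite line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    cross = (bx - ax) * (py - ay) - (by - ay) * (px - ax)
    return abs(cross) / math.hypot(bx - ax, by - ay)


def part_gains(pickup: Point, wires: list[tuple[Point, Point]],
               height: float = 0.02) -> list[float]:
    """Relative level of each wire's part at the pickup, loudest part = 1.0.

    The distance r combines the pickup's offset from each wire on the surface
    with its height above it, so r never reaches zero.
    """
    raw = [1.0 / math.hypot(distance_to_line(pickup, a, b), height)
           for a, b in wires]
    loudest = max(raw)
    return [g / loudest for g in raw]
```

For two wires crossing at the origin, holding the pickup over the crossing gives both parts equal level, while moving a little along one wire leaves that part dominant and the other almost silent.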

Check out Om Unit’s work on his Bandcamp here: https://omunit.bandcamp.com/

About

Vulpestruments is the project of artist Tom Fox, revolving around sound art practices, experimental instrument design, data sonification and interactive installation design.

Tom is also the creative director of Hackoustic, the London-based community of musical hackers and inventors.

He also works for Music Tech Fest, running their Sparks workshops.

He has run workshops, curated events or performed at:

He hates talking in the third person.

MTF Podcast

My partner in crime Tim Yates and I were interviewed for the MTF Podcast about our work running Hackoustic. Check it out here:

Microbit Organ

I made a simple gesture-controlled organ using a micro:bit and PureData.

It tracks the roll angle using the micro:bit’s accelerometer, which in turn controls the volume of a sine wave generated in PureData.
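A minimal sketch of turning raw accelerometer readings into a roll angle and then a volume, in plain Python rather than on the micro:bit or in the Pd patch. It assumes readings in milli-g, that a face-up micro:bit reads z of about -1024, and that tilting it to the right makes x positive; the -90 to +90 degree range and the function names are hypothetical choices.

```python
import math


def roll_degrees(x: int, z: int) -> float:
    """Roll angle in degrees from accelerometer x and z (milli-g).

    Assumed axis convention: flat and face-up reads z of about -1024,
    giving 0 degrees; tilted fully right gives about +90.
    """
    return math.degrees(math.atan2(x, -z))


def roll_to_volume(roll: float) -> float:
    """Map roll from -90..+90 degrees onto a 0..1 volume, clamped at the ends."""
    return min(1.0, max(0.0, (roll + 90.0) / 180.0))
```

Clamping matters because tilting past vertical would otherwise push the volume outside 0..1 and could clip the sine wave.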

Microbit Experiments

I’ve been experimenting with the micro:bit microcontroller for a while now, and have realised I don’t have a central post showcasing what I and other people have been up to. So here are some of the things we’ve done with micro:bits:

In this micro:bit experiment, Helen Leigh and I connected her (at the time) prototype mini.mu glove to PureData, which then triggered my DADA Machines Automat kit to strike some tubular bells based on gestural control!
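Gesture-triggered strikes need to fire once per gesture rather than once per sensor reading. A minimal sketch of one common way to do that (a threshold with hysteresis), assuming the glove's accelerometer magnitude is the trigger signal and a MIDI note-on is sent to the Automat for each strike. The thresholds, note number and names are hypothetical; actually sending the bytes through a MIDI port (for example with a library such as mido) is left out.

```python
class StrikeTrigger:
    """Fire once when a reading rises past `on`; re-arm only after it drops below `off`."""

    def __init__(self, on: float, off: float):
        if off >= on:
            raise ValueError("off threshold must be below on threshold")
        self.on, self.off = on, off
        self.armed = True

    def update(self, value: float) -> bool:
        """Feed one reading; return True exactly when a strike should happen."""
        if self.armed and value >= self.on:
            self.armed = False
            return True
        if not self.armed and value <= self.off:
            self.armed = True  # gesture finished, ready for the next one
        return False


def note_on(note: int, velocity: int = 100, channel: int = 0) -> bytes:
    """Raw 3-byte MIDI note-on message: status 0x90 | channel, note, velocity."""
    return bytes([0x90 | (channel & 0x0F), note & 0x7F, velocity & 0x7F])
```

The gap between the two thresholds stops a shaky hand hovering around a single threshold from re-striking the bell several times in one movement.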

In this experiment, Helen Leigh controls the tones being played through my inductive pendulum, while I alter the loop station to build melodies and textures.

During the MTF Frankfurt creative labs, I found a way to control a sample’s playback rate, and even to reverse the sample. Here, I use the accelerometer to control the playback speed of the Amen Break.
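In PureData, variable-speed playback is often built from a read position that advances by a rate each sample, feeding something like `[tabread4~]`; this is not necessarily how the patch here works. A minimal Python sketch of the same idea, with linear interpolation and wrap-around looping, where a negative rate plays the sample backwards. The tilt-to-rate mapping (accelerometer x in milli-g, up to ±2× speed) and the function names are hypothetical.

```python
import math


def play_at_rate(samples: list[float], rate: float, n_out: int,
                 start: float = 0.0) -> list[float]:
    """Read a looped sample at a variable rate using linear interpolation.

    rate 1.0 = original speed, 0.5 = half speed, negative = reversed.
    """
    size = len(samples)
    out = []
    pos = start
    for _ in range(n_out):
        base = math.floor(pos)
        i = base % size            # wrap the read position into the buffer
        frac = pos - base          # fractional part between two samples
        nxt = (i + 1) % size
        out.append(samples[i] * (1 - frac) + samples[nxt] * frac)
        pos += rate
    return out


def tilt_to_rate(x: int, max_rate: float = 2.0) -> float:
    """Map accelerometer x (about -1024..1024 milli-g) to -max_rate..+max_rate."""
    return max(-max_rate, min(max_rate, x / 1024 * max_rate))
```

Holding the micro:bit level gives a rate near zero (the loop almost freezes), tilting one way speeds the break up and tilting the other way plays it in reverse.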

At the same MTF Frankfurt, we tried combining the micro:bit with a trombone! Here, Petter wears a mimu glove on his playing hand and controls the synths on my laptop via the same accelerometer data as most of these other experiments.

At a recent Hackoustic Presents night, I put together this basic micro:bit organ, which uses a bunch of micro:bits connected to the same PureData patch. Here, they control the volume levels of predetermined sine waves, with one of them controlling the sample playback rate of a bell .wav file.
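The sine-wave side of this organ is essentially additive synthesis with one gain per micro:bit. A minimal Python sketch, not the actual patch: the frequencies, the 44.1 kHz sample rate and dividing by the number of voices to avoid clipping are all assumptions, and the bell sample voice is left out.

```python
import math


def organ_block(freqs: list[float], gains: list[float], n: int,
                sample_rate: int = 44100, start: int = 0) -> list[float]:
    """Sum fixed-frequency sine waves, each scaled by its own micro:bit's gain.

    Dividing by the number of voices keeps the output within -1..1 even when
    every gain is at 1.0.
    """
    if len(freqs) != len(gains):
        raise ValueError("need one gain per frequency")
    voices = len(freqs)
    block = []
    for i in range(start, start + n):
        t = i / sample_rate
        s = sum(g * math.sin(2 * math.pi * f * t) for f, g in zip(freqs, gains))
        block.append(s / voices)
    return block
```

Each micro:bit then only has to update one number in `gains`, for instance using a roll-to-volume mapping, while the frequencies stay fixed.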

I wrote up the method I use to connect the micro:bit to PureData in this post here; feel free to use the code and share what you do with it!

MTF Frankfurt

I had the great honour of partnering up with Music Tech Fest to help coordinate and lead the MTF Labs at MTF Frankfurt.

The premise was to bring together a whole host of music and tech experts from different fields to work collaboratively for just 24 hours and build something new. We ended up with a performance that included motion-tracked music generation, super-low-latency capacitive touch sound synthesis, gravitational field synthesisers and a bunch of other awesome stuff.
